Issue #51 – Key Considerations for AI Governance

If you want to use AI, you need to govern it strategically; take 15 minutes to understand how

Read time: 15 minutes

There is something nobody is telling you about AI.

It’s that most large enterprise organisations are, when it comes down to it, merely dipping a toe in the water.

Sure, some may have a few AI POCs, a data science team building models, a helper chatbot or “AI-embedded” SaaS tooling.

But many of these businesses still won’t consider using AI across the wider organisation. Why?

Because it lacks governance!

I’ve worked with my fair share of large organisations, and a lot of them have been around a long time. Most are global, they all have a lot of sensitive personal data, and their operational systems are built on legacy tooling/ technology.

All of these things spell danger when talking about org-wide AI rollout.

And while organisations say they are building AI, the reality is more of a mixed bag. Enterprise organisations like these aren’t ready to operationalise AI at scale unless it is highly secure. Many companies don’t even allow workers to use ChatGPT, Claude, or other LLM tools due to security risks.

So, AI lives in small, cordoned-off teams that aren’t connected to the main customer data or mission-critical technologies.

I mention all this because it underscores a huge point that AI influencers and startups are forgetting: widespread adoption of AI won’t happen until large, slow-moving and conservative companies can trust it!

And a key part of doing that is building AI Governance that prioritises value while ensuring compliance. Welcome to part 2 of my AI Governance thought exercise!

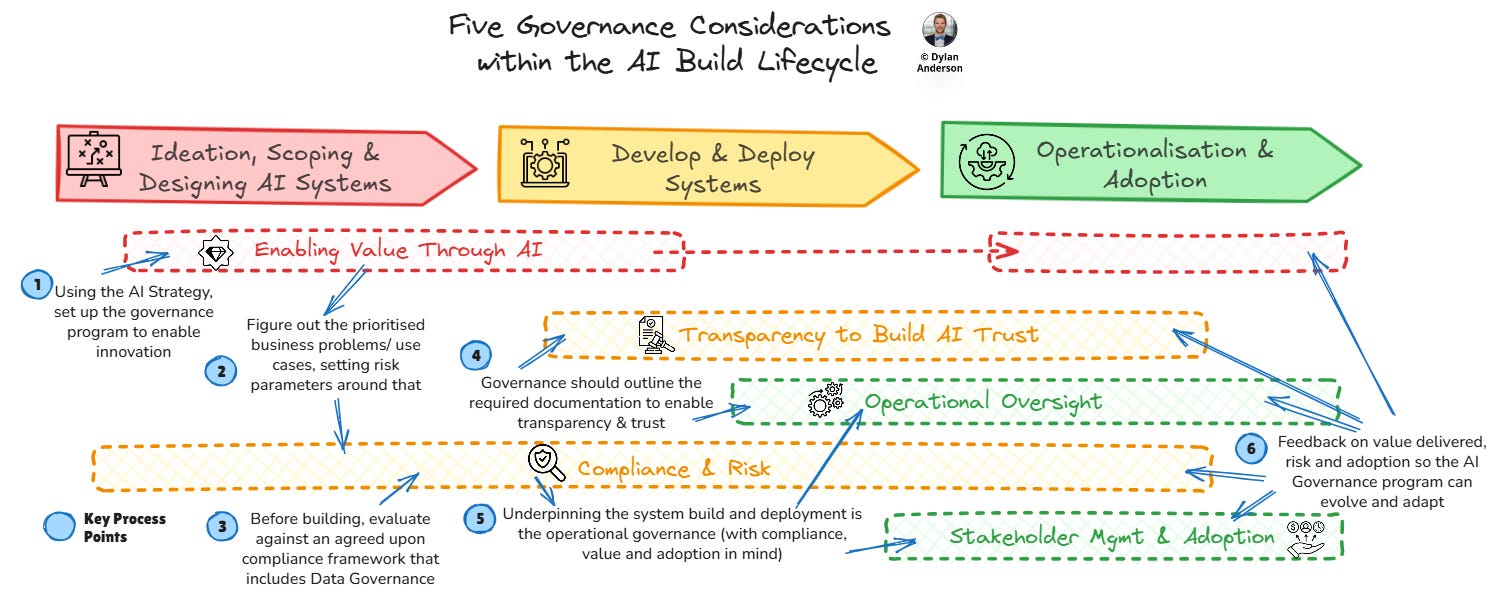

Five Considerations for AI Governance

In the last article, we defined AI Governance:

AI Governance is the comprehensive framework that ensures AI systems are developed, deployed, and operated to capitalise on the organisation’s objectives and drive insight/ action from its underlying data, while maintaining high ethical, security, transparency, and regulatory standards

But what does this mean in practice? What do you need to think about to make that a reality? How do you approach it strategically?

Well, five categories need to be taken into consideration within your AI Governance framework:

Enabling Value through AI

Transparency to Build AI Trust

Operational Oversight

Compliance & Risk

Stakeholder Management & Adoption

1) Enabling Value through AI – What are we trying to get out of our AI tooling/ plans, and what does that mean for its governance?

The first thing companies need to internalise about AI governance is that it isn't about constraining innovation. Instead, I’d argue it's about ensuring AI initiatives are set up to enable scalable, innovative solutions that deliver measurable business outcomes.

That means approaching AI governance not from a compliance angle, but from what AI can and should do in your organisation. It starts with the fundamental question: what business problem are we solving?

What this provides is a roadmap of the highest-priority use cases and reasons for AI’s existence. From that, you can define parameters and guardrails for how governance enables those outcomes in a safe, compliant, and structured way. If you already have an AI Strategy, that might be enough to get you started!

There are a few components of this to keep in mind:

Clear use cases with quantifiable value, usually in the form of success metrics/ goals

Business stakeholder involvement to workshop these perspectives together

ROI frameworks that balance governance requirements against expected benefit/ value

Assessing whether AI solutions are actually better than simpler alternatives

With these things in mind, your governance program (and its underpinning processes) treats AI as a value-enabling tool that needs to be appropriately managed, not as a compliance risk that prevents the pursuit of AI. This is only a portion of AI governance, but leading with value makes sure all teams are on the same path with similar goals.

2) Transparency to Build AI Trust – How does the AI product work and make decisions? How can we trust its output?

Transparency within AI extends beyond just understanding how the system works or whether there is bias in its decision-making. The true purpose of AI transparency is building trust.

Of course, the trust aspect isn’t technical, nor does it align with the classic ‘move fast and break things’ mindset.

AI systems won’t be used or adopted by humans if they don’t trust the outputs or understand what the system is doing. Components of this include:

Data feeding into an AI system

Features influencing model predictions

How algorithms weigh different inputs

High-level overview of why specific decisions were reached

Testing and monitoring to identify and address inherent bias or discriminatory patterns (especially in industries/ scenarios where data is imperfect or mistakes are easy to make)

The user doesn’t need to understand everything about the AI product, but each use case should be assessed for how transparent the system needs to be, given the business consequences of its decisions. For example, if an AI system denies a loan application or flags a resume for rejection, stakeholders need to understand why, for both fairness and legal compliance.
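For a simple linear scoring model, "how algorithms weigh different inputs" can be surfaced directly, because each feature's contribution to the score is just its weight times its value. The minimal Python sketch below turns those contributions into reason codes for a declined application. The feature names, weights, and threshold are hypothetical. More complex models generally need dedicated explanation techniques (e.g. SHAP) rather than this direct read-out.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical transparent scoring model: score = bias + sum(weight * feature value)."""
    weights: dict[str, float]
    bias: float
    threshold: float  # applications scoring below this are declined

    def contributions(self, applicant: dict[str, float]) -> dict[str, float]:
        # In a linear model each feature's effect is weight * value, and the effects add up exactly.
        return {name: w * applicant[name] for name, w in self.weights.items()}

    def decide(self, applicant: dict[str, float], top_n: int = 2) -> tuple[bool, float, list[str]]:
        contribs = self.contributions(applicant)
        score = self.bias + sum(contribs.values())
        approved = score >= self.threshold
        # Reason codes: the features that pulled the score down the most, most negative first.
        negatives = sorted((c, name) for name, c in contribs.items() if c < 0)
        reasons = [name for _, name in negatives[:top_n]]
        return approved, score, reasons


# Hypothetical weights for illustration only.
scorer = LinearScorer(
    weights={"income_k": 0.04, "debt_to_income": -3.0, "missed_payments": -0.8},
    bias=0.5,
    threshold=1.0,
)
approved, score, reasons = scorer.decide(
    {"income_k": 45, "debt_to_income": 0.6, "missed_payments": 2}
)
print(approved, round(score, 2), reasons)
# prints: False -1.1 ['debt_to_income', 'missed_payments']
```

The reason codes give a loan officer or auditor a plain-language starting point ("high debt-to-income, missed payments") without exposing the whole model.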

Moreover, transparent documentation and logic help with regulatory oversight. Transparency also makes it easier to adapt the product if it needs to evolve to take on new data, information, or use cases. All of this will become increasingly important as newer AI products start to take the human out of the loop.

3) Operational Oversight – How do we manage AI systems throughout their entire lifecycle from development to retirement?

One thing people don’t realise about AI products is that they aren’t standalone tools; they are holistic systems. Anybody who knows technology understands that systems make things 100x harder. Unlike traditional software, AI systems are dynamic, and multiple components will degrade over time as real-world conditions change (e.g., data quality, model context, system parameters, tech architecture, etc.). Therefore, operational oversight needs to be baked into system governance to ensure these systems remain reliable and effective.

This idea extends beyond monitoring technical performance. Governance policies need to consider maintaining business value and managing compliance and regulatory risks, as well as system performance (e.g., data and technology). Some high-level things to keep track of include:

Data quality monitoring to ensure training and inference data remain representative and accurate

Model performance tracking to detect when algorithms start making worse decisions

Version control and rollback capabilities when model updates cause problems

Automated alerting when the AI system's behaviour deviates from expected patterns

Regular retraining schedules based on new context, data drift, regulatory changes or performance degradation

Model retirement when systems or tech become obsolete or problematic, or change significantly
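The data quality monitoring and automated alerting items above can start very simply. One widely used drift statistic is the Population Stability Index (PSI), which compares how a feature is distributed in the training data versus live traffic. The sketch below is a minimal, standard-library-only illustration. The feature name is hypothetical, and the 0.25 alert threshold is a common rule of thumb rather than a standard, so you would calibrate it for your own models.

```python
import math
from typing import Optional


def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI between a baseline sample (e.g. training data) and a live sample of the same feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline's range; out-of-range live values go to the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps the log defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(feature: str, baseline: list[float], live: list[float],
                alert_at: float = 0.25) -> Optional[str]:
    """Return an alert message if drift reaches the threshold, otherwise None."""
    psi = population_stability_index(baseline, live)
    if psi >= alert_at:
        return f"ALERT: '{feature}' has drifted (PSI={psi:.2f}); review performance and consider retraining"
    return None


baseline = [i / 100 for i in range(100)]
print(check_drift("customer_age_scaled", baseline, baseline))  # no drift, so None
print(check_drift("customer_age_scaled", baseline, [0.9 + i / 1000 for i in range(100)]))
```

In practice a check like this would run on a schedule per feature, with alerts routed to the model's owner, which ties drift back to the retraining and rollback items in the list.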

In most organisations, AI systems operate as black boxes, so when they fail or degrade, users aren’t immediately warned. Worse, by then many users will have come to trust whatever comes out of the system. Operational governance provides the frameworks to catch these issues before they impact business outcomes or create significant risks.

4) Compliance & Risk – How do we manage AI-specific risks while meeting evolving regulatory requirements?

Don’t worry, I didn’t forget about compliance and risk.

This is the most significant topic on the minds of large organisations designing and implementing AI governance. There are a lot of things to consider here, more than Data and IT governance can handle. And remember what I said above?

AI is a system, so you have to think about compliance and regulatory risks in tandem—technology will impact data, which will impact models, which will impact your AI, etc.

So without getting into too much detail, here are eight critical areas of compliance and risk management when it comes to AI that you have to consider:

Data Governance & Privacy: AI starts with data, so naturally, our governance model should begin by ensuring AI training data and outputs comply with privacy regulations like GDPR. Without governance designed into the systems, Gen AI can inadvertently expose personal information, or data may be retained within an AI system when it shouldn’t. Components to think about include governing data anonymisation (potentially in pipelines), retention policies, and consent management.
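As one illustration of governing anonymisation "in pipelines", a pre-processing step can drop free-text fields and replace direct identifiers with keyed hashes before data reaches model training. This is a minimal sketch. The field names and key handling are hypothetical, and it is pseudonymisation rather than true anonymisation. Under GDPR, pseudonymised data generally still counts as personal data, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical key: in a real pipeline this would come from a secrets manager, never from source code.
PSEUDONYMISATION_KEY = b"replace-with-a-managed-secret"

# Hypothetical field policy for one dataset, ideally defined in your data governance standards.
DIRECT_IDENTIFIERS = {"email", "full_name", "phone"}
DROP_FIELDS = {"support_notes"}  # free text that may contain personal data and isn't needed for training


def pseudonymise(value: str) -> str:
    """HMAC-SHA256: the same input always maps to the same token (so joins still work),
    and linking a token back to a person requires the key."""
    return hmac.new(PSEUDONYMISATION_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(record: dict[str, str]) -> dict[str, str]:
    cleaned = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue  # retention policy: never copy this field into the training set
        cleaned[field] = pseudonymise(value) if field in DIRECT_IDENTIFIERS else value
    return cleaned


row = {"email": "jane@example.com", "country": "UK", "support_notes": "Called about a billing dispute"}
print(prepare_for_training(row))
```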

Regulatory Compliance: First, determine how your AI system matches up against local/ relevant regulations (the Future of Life Institute built a compliance checker for the EU AI Act). Then, evaluate the relevant regulatory components (which may be unique to your industry) and embed those into your compliance guidelines. Remember, all this is to avoid the significant fines that regulators can hand out!

Technology & Tooling Compliance: AI is going to be plugged into other tech and tools, so you need to make sure these tools, platforms, and infrastructure meet security and compliance standards throughout the AI lifecycle. Think about data protection capabilities, model registries, version control, access management, compliance monitoring within CI/CD pipelines, and secure environment setup for AI experimentation.

Algorithmic Fairness & Bias: This builds on the transparency category, but part of risk and compliance means monitoring bias and fairness within the AI algorithm/ model. The goal here is to prevent discriminatory outcomes, which is particularly critical for high-risk applications (e.g., hiring, lending, etc.) where they can cause significant legal and reputational damage.
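A simple starting point for bias monitoring is comparing selection rates across groups. The sketch below flags any group whose rate falls below 80% of the highest group's rate. That cut-off is a rule of thumb (the "four-fifths rule") used in US employment guidance. The group labels and data are hypothetical, and a single ratio like this is a screening signal for further review, not a full fairness assessment.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """outcomes: (group, was_selected) pairs, e.g. logged hiring or lending decisions."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_flags(outcomes: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, float]:
    """Return {group: impact ratio} for groups selected at under `threshold` x the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical decision log: group A selected 50/100 times, group B 30/100 times.
log = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
print(disparate_impact_flags(log))
# prints: {'B': 0.6}
```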

AI System Security: Remember how AI is a system? This means you need security against threats at multiple touchpoints and from many angles. AWS provides a comprehensive lifecycle risk management approach, mapping threats like prompt injection, DoS, and privilege modification to parts of the AI lifecycle. In the end, this links to other categories and is about keeping your system safe from external hacking or malicious intent.

System Documentation: If an AI tool is being implemented at scale in an organisation, it will require documentation to demonstrate that the system is compliant. From a governance POV, those compliance requirements may shift, but documents like model development approach, training methodology, access logs, performance metrics, decision logs, etc., should provide the required information for any audits or impact assessments.

Third-Party Risk: An underappreciated category is how third-party vendors and external data sources are using AI. Do their systems harbour vulnerabilities? How should they use your AI systems to remain compliant? Including third-party governance rules on data storage, management, AI development, etc., is essential, especially since a lot of AI dev or tooling is coming from vendors.

Ethics: Not really compliance or risk, but as AI evolves, companies need to consider ethics. So having a framework that defines acceptable use cases for AI and prohibits harmful applications is essential (you don’t want that type of thing coming back to haunt you down the line).

That was a lot of information, but the key insight is that AI compliance is a multifaceted beast and needs to be approached from multiple angles.

AI compliance isn't just about avoiding penalties—it's about building sustainable competitive advantages. Organisations with robust compliance frameworks can pursue ambitious AI projects that competitors can't risk. They move faster because they've already addressed the governance questions that slow down others. Compliance becomes an enabler of innovation rather than a barrier to it.

5) Stakeholder Management & Adoption – How do we make sure AI is adopted and used in a responsible way?

A huge piece of any governance domain is change management. This is not said enough and is often ignored by leadership when they roll out AI or any governance plans. After all, there is a big difference between adopting (and governing) new technology & tooling because it is useful vs. because your boss told you to. And we are starting to see both sides of the coin with AI.

That is why, within your AI governance, you need to consider the cultural change.

Because let us be clear, AI is rapidly changing the culture of work, from how we work to our expectations of employees to the risk parameters in what we do.

So rather than top-down mandates, governance should be created in tandem with teams using the tooling to ensure they follow the rules while learning and leveraging AI. What might this entail?

AI Literacy Programs: This training should encompass a combination of what teams can do with AI and why responsible AI usage matters. Make it about their success, not just compliance requirements

Hybrid Governance Oversight & AI Champions: Just like your data owners, set up governance advocates/ experts within development teams rather than relying on a centralised compliance function. I see this as a hybrid approach with a centralised team and federated champions within dev/ BAU teams

Workflow Integration: Building governance into existing AI development processes is crucial! This should flow from sourcing to usage

Value Communication: Demonstrating to users how governance enables innovation rather than constraining it. This is also important for executive communications and an eventual audit trail

Continuous Feedback: Honestly, feedback channels exist in any form of change management. Allow for recommendations from developers and users to improve governance based on practical experience

Product Led: We will see the AI Product Manager role grow, and it is these individuals who should manage this perspective, ensuring the consideration of governance, compliance and responsible usage in all AI development/ adoption

Remember, adoption and usage are the end goals for any AI product/ tool. Therefore, the organisation needs to think about AI adoption and usage in this dualistic way—built to provide value, but with governance principles embedded in the process. Don’t overlook it!

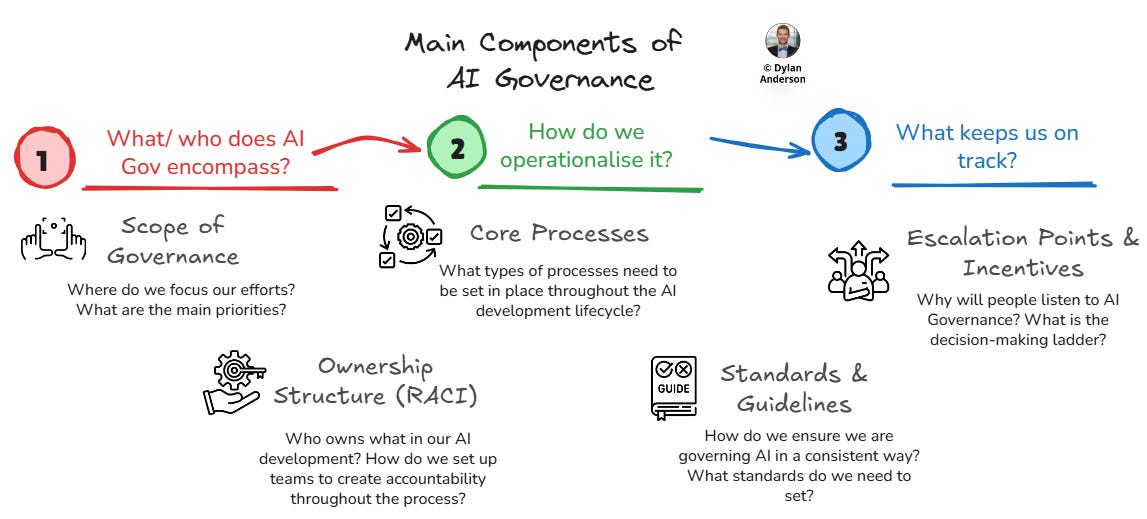

Components of a Governance Framework

Okay, so now we have all the considerations you need to think about when it comes to AI Governance, but we still lack the underlying framework.

This requires an approach customised to your organisation. You need to translate these five considerations into a structured operating model with clear ownership, processes, and escalation points that accelerate rather than hinder AI development.

Let’s dig into the components of what you need in an AI Governance framework:

Scope of Governance

First, it’s imperative to set what your AI Governance practice will focus on to create clear boundaries and prevent scope creep, ensuring you focus limited resources on high-impact areas. There are two elements to this:

One is to have a clear "what we do/ don't cover" document to prevent confusion and enable the governance team to focus on delivering value in the right areas.

The other bit is to prioritise the scope of what is done, which should be an ongoing activity with key AI stakeholders (potentially in an AI Council format).

The scope should align with business priorities, starting with AI systems posing the highest risk or delivering the most value, then expanding coverage systematically.

Ownership Structure (RACI)

Ownership within the AI ecosystem is going to be a tricky beast. One thing you can start with is a RACI framework (Responsible, Accountable, Consulted, Informed) and roles to oversee AI Governance.

If you are setting up an operational structure, I would recommend a hybrid centralised-federated model similar to Data Governance but adapted for AI's unique complexities. This may include an AI Governance Office to provide centralised coordination and expertise, an AI Governance Council of senior leaders to make key decisions, and defined roles for AI Product Owners, Champions, and Stewards. Of course, you also have the AI Engineering and Development Teams to implement changes and embed governance into AI development. Include these roles in your RACI for key governance processes.
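A RACI is easy to keep as structured data alongside your governance documentation, which also makes it checkable. The sketch below encodes a hypothetical RACI for two governance processes using the roles described above. It then checks two widely used conventions: each process has exactly one Accountable owner and at least one Responsible role. The assignments themselves are illustrative, not a recommendation.

```python
# Hypothetical RACI: process -> {role: one of "R", "A", "C", "I"}.
RACI: dict[str, dict[str, str]] = {
    "Use-case assessment & approval": {
        "AI Governance Council": "A",
        "AI Product Owner": "R",
        "AI Governance Office": "C",
        "AI Engineering Team": "I",
    },
    "Model monitoring & retraining": {
        "AI Product Owner": "A",
        "AI Engineering Team": "R",
        "AI Steward": "C",
        "AI Governance Office": "I",
    },
}


def validate_raci(raci: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means every process passes the checks."""
    problems = []
    for process, roles in raci.items():
        codes = list(roles.values())
        unknown = sorted(set(codes) - {"R", "A", "C", "I"})
        if unknown:
            problems.append(f"{process}: unknown codes {unknown}")
        if codes.count("A") != 1:
            problems.append(f"{process}: needs exactly one Accountable, found {codes.count('A')}")
        if "R" not in codes:
            problems.append(f"{process}: no role is Responsible")
    return problems


print(validate_raci(RACI))  # an empty list: both processes pass
print(validate_raci({"Model retirement": {"AI Champion": "C", "AI Governance Office": "C"}}))
```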

The key difference from Data Governance: AI ownership will require deeper technical understanding due to algorithmic complexity, making these less suitable for side-of-desk roles.

Core Processes

The next significant component is ensuring the governance framework outlines key processes spanning the AI lifecycle. In my mind, this involves five categories:

AI System Assessment & Approval to standardise how teams evaluate the value, risk and compliance requirements, while aiming for streamlined approval for low-risk use cases.

Model Development & Testing to integrate governance checkpoints, including data validation, bias testing, and transparency documentation.

Deployment & Monitoring to consider all dependencies when moving to production, and to track the live model for drift, performance, and compliance adherence.

Lifecycle Management processes to oversee versioning, retraining, retirement, and any model evolutions.

Adoption & Rollout processes to get buy-in across teams, while embedding user requirements and feedback throughout the AI tool lifecycle.
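The "streamlined approval for low-risk use cases" in the first process can be made explicit as a triage rule that routes each proposed use case to the right level of review. The sketch below is a hypothetical example: the attributes, weights, and routes are assumptions you would replace with your own standards. Sending decisions about individuals (such as hiring or credit) straight to the council loosely mirrors how the EU AI Act treats those areas as high-risk.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    FAST_TRACK = "fast-track sign-off by the AI Product Owner"
    STANDARD = "standard review by the AI Governance Office"
    COUNCIL = "escalate to the AI Governance Council"


@dataclass
class UseCase:
    name: str
    decides_about_people: bool  # e.g. hiring, lending, eligibility decisions
    uses_personal_data: bool
    customer_facing: bool
    third_party_model: bool


def route_for_approval(uc: UseCase) -> Route:
    # High-impact decisions about individuals always get senior review.
    if uc.decides_about_people:
        return Route.COUNCIL
    # Hypothetical weighting: personal data counts double; other factors add one point each.
    score = 2 * int(uc.uses_personal_data) + int(uc.customer_facing) + int(uc.third_party_model)
    return Route.STANDARD if score >= 2 else Route.FAST_TRACK


for uc in [
    UseCase("Internal meeting summariser", False, False, False, True),
    UseCase("Customer support chatbot", False, True, True, True),
    UseCase("CV screening assistant", True, True, False, True),
]:
    print(f"{uc.name}: {route_for_approval(uc).value}")
```

Keeping the rule this explicit makes it easy to audit and to adjust as standards evolve, which matters more than the particular weights.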

There are definitely other processes that need to exist within your AI Governance framework, with flexibility being the key consideration.

Standards & Guidelines

Unlike data standards, AI standards must be adaptive. As the business context changes or the AI regulatory landscape evolves, the standards, and the guidelines used to set them, will need to change too.

The areas these standards apply to may include:

Acceptable use cases

Data quality requirements

Model documentation guidelines and requirements

Testing and training procedures

Data and platform security & privacy requirements

Transparency levels

Escalation Points & Incentives

Finally, there should be a ladder of decision-making. The points at which an issue should be escalated to the AI Governance Council should be clear to all owners/ stewards. How these decisions interact with Data Governance (DG) should also be outlined.

From the incentive side, many of these roles may require additional time or knowledge, so factors such as pay, extra training, or responsibility should be appropriately considered. Incentives might reward responsible AI usage, with governance metrics tied to performance evaluations.

How to Implement AI Governance

In this final section, I wanted to touch on implementation. If you haven’t read it, go look at my article on Data Governance implementation, as it covers more detail than I will get into here (much of it very relevant for AI Governance).

For example, I would recommend you mimic the five necessary components I set out for DG implementation. I included the graphic below, so you can see the parallels between DG implementation and AI.

In the end, the main goal of any governance program is to start strategically, prove value quickly, scale systematically, and show that governance enables rather than constrains innovation.

So instead of repeating myself from my last article, I will give a high-level, phased approach to a hypothetical implementation of an AI Governance team, from strategy to execution. Consider this a plan-on-a-page, which is something you would see to kick off a consulting project or proposal (if you were ever interested…):

Phase 1: Foundational Framework & Identified Quick Wins

Successful AI Governance implementation means starting strategically, proving value quickly, and scaling systematically. This means starting with what exists or what could exist (and deliver value) quickly.

Begin by mapping the current AI landscape (e.g., existing use cases, third-party tools, shadow AI usage). This creates a foundational view of existing risks and helps scope the program around real compliance requirements and business priorities.

With that in mind, define the program goals in coordination with business stakeholders and leadership. Then build a basic framework that includes a high-level ownership structure, core processes, standards, and escalation points. This framework should focus on 2-3 strategic, quick-win governance priorities that showcase the program’s value.

Phase 2: Ownership Set-up & Process Integration

With governance deployed to initial use cases, it is time to roll out ownership assignments and embed governance checkpoints into more AI project workflows.

Using the foundational framework as a base, work with relevant stakeholders to create a hybrid governance model. This might include identifying and training AI Champions, tool owners, and governance council members who will become key governance enablers within their teams.

Processes are the next step. This means building on the foundational ones to make them more comprehensive. It also includes implementing monitoring and feedback loops to measure the impact of governance. A considerable part of this is a focus on change management to help teams understand how to use AI responsibly and how the governance program addresses specific compliance risks.

Phase 3: Streamline Governance Maturity to Enable Innovation

With the AI Governance program built with a strong foundational framework and beginning to deliver on core use cases, the next step is to increase maturity to enable future AI initiatives. Evaluate future AI use cases, upcoming regulatory requirements and longer-term organisational goals (which will no doubt involve AI) to ensure governance frameworks can accommodate emerging technologies and business needs.

Then assess program maturity and organisational AI adoption to identify optimisation opportunities. This may include remodelling AI development processes with governance principles embedded from the start. Or maybe creating a more joined-up data and AI governance team/ engine.

Finally, continue to prioritise creating trust in AI across the organisation. Getting value from these tools depends on organisation-wide adoption in a responsible, compliant manner.

Concluding the Governance Chapter

Maybe we, as humans, get the wrong idea of what governance means because of how incompetent most governments are across the world? Or is it because governance is underinvested in and comes across as cumbersome within our organisations?

Either way, as you can see from my series on Data and AI Governance, this perspective is outdated; instead, governance in the Data & AI world should be about value-enablement and creating competitive advantage. Organisations that do governance right have a stronger foundation, allowing them to pursue ambitious data & AI initiatives that others can't (this may be due to data quality, operational or risk factors).

In the end, I implore organisations to innovate, but innovate responsibly.

As AI becomes more regulated and stakeholder expectations rise, organisations without governance capabilities will find themselves scrambling. This is especially true as businesses change how they operate and deliver value with AI in mind.

Speaking of that, my next article takes a turn back to my favourite foundational components—the business model. We will define what it is, explore its importance, and understand how data & AI are changing it. Until then, have a great Sunday and rest of the week!

Thanks for the read! Comment below and share the newsletter if you think it’s relevant! Feel free to also follow me on Substack, LinkedIn, and Medium. See you amazing folks next week!
