Issue #50 – Evolving from Data to AI Governance

Don't make the same mistakes with AI Governance that we made with Data Governance (DG); it needs a different approach

Read time: 11 minutes

I’ve never seen anything move as fast as AI.

Every day you get new models, use cases, unicorn startups, courses, and everything else that has come with the AI hype.

From an innovation perspective, this is fascinating and exciting!

But from a governance perspective, this is terrifying!

Why? Because you can’t govern what you don’t understand!

And let me tell you something: nobody truly understands AI.

This manifests itself in many different ways:

Monitoring how employees use Large Language Models like ChatGPT, Claude, Gemini, etc.

Understanding what your new ‘AI-enabled’ SaaS tool is actually doing with AI (is it taking your private data and learning from it???)

Building internal AI tools against identified use cases

Plugging AI tools into your organisation’s data without clarity on the security, privacy, compliance and regulatory consequences

Imagine thinking about these things every day. Suddenly, AI doesn’t sound so exciting; it sounds like a never-ending headache (especially since new tools, models, and ideas come out daily).

And I say all this on the back of another stark truth: most companies don’t even have Data Governance properly set up!

Having just written three articles on Data Governance, I’m seeing a lot of the same mistakes with AI Governance that we saw with DG:

Addressing it from a compliance perspective

Massive underinvestment from leadership

Getting people who don’t understand Data or AI to lead it

Not looking at the potential of AI, just the risks involved

More than that, AI is advancing at what feels like 10x the speed data did. 2012 was hailed as the year of Data Science, yet we were still figuring out what that meant ten years later. Almost three years after the launch of ChatGPT, we have introduced more terms and types of tools and models than I can list (and people still don’t understand half of them).

There is no doubt in my mind that AI is here to stay. But if we want to use it properly and realise its potential, we need to govern it and avoid repeating the mistakes we made with Data Governance (which, by the way, is still incredibly important in today’s world).

So let’s dive in. In this article, we will explain the need for AI Governance and define what it should entail. And in the following article, we will dig into the different components to keep in mind and how to implement them.

The Organisational Approach to AI

You can probably think of dozens of reasons for AI Governance. But I’m not going to focus on those just yet.

First, I want you to think from an organisational perspective. Imagine you are a CEO. You hear about all these possibilities with AI, but at the same time, you are weighing the potential negative consequences for the business if something goes wrong. What do you do?

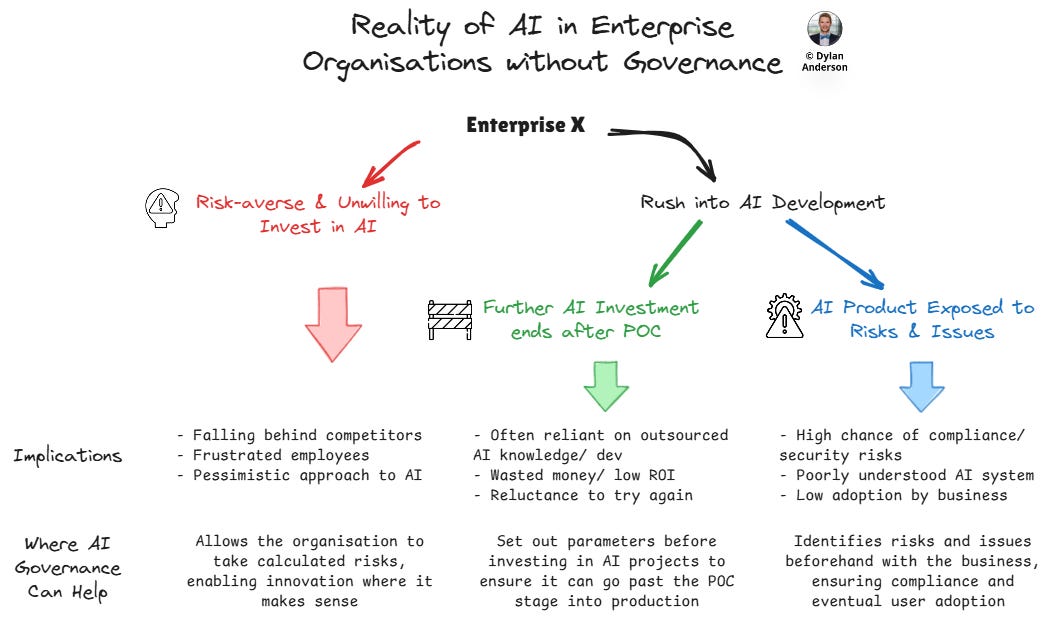

Well, instead of approaching it with well-structured governance, most organisations fall into two detrimental categories:

They become so risk-averse they miss AI's transformational potential

They rush headlong into AI without considering its implications (either internal or external)

I’ve seen lots of large organisations in the first group. They are usually wary of data privacy and security risks and often don’t have a clear strategy for getting value from AI. As a result, new ideas and use cases get stuck in committees or approval processes that take 4-6 months, ensuring nothing gets deployed. Teams may want to use AI, but are resigned to the fact that they can’t, which stifles any innovation in the space. Here, if governance exists, it is an inhibitor to progress.

The second group of organisations tends to be smaller or more data/tech-focused. Here, different departments experiment with AI tools, but without coordination and proper oversight. This often leads to a patchwork of ungoverned systems that can’t scale or integrate, or to decisions made on horribly governed data. In the worst case, this leads to public issues like compliance violations, discriminatory decisions via algorithmic bias, data breaches, reputational damage, etc.

Neither situation is ideal, which is why everybody is starting to talk about AI Governance.

Organisations (and people) understand they need to use AI, and to do that effectively there has to be some sort of governance involved, especially since it is such a fast-evolving and poorly understood domain.

So as AI becomes more regulated and stakeholder expectations rise, organisations that haven't built foundational governance capabilities will find themselves scrambling to catch up, while competitors with mature frameworks keep building and scaling.

Now, I should say that there are a lot (A LOT) of companies doing really cool things with AI, but there are also a lot that aren’t. And despite the unknown consequences of AI, organisations across every industry are rushing to implement these solutions.

These may be customer service chatbots, AI agents, internal RAG (retrieval-augmented generation) tools pulling proprietary documentation, fraud detection models, or any other use case dreamt up by an expensive consulting firm or an ambitious leadership team.

That brings us to the more specific need for AI Governance in every organisation (which follows a similar pattern to the above).

The Need for AI Governance

Many companies are building AI proofs of concept (POCs), but most lack proper governance.

And given how many companies are heading down this path, there is a dangerous gap between AI development and oversight. This typically leads to one of two outcomes:

The POC goes nowhere because the necessary controls and compliance requirements weren’t considered, so it can’t be productionised in the organisation-wide environment with proprietary data

The AI product is released and deployed, but is exposed to potential issues from algorithmic bias, privacy violations, non-transparent outcomes, regulatory violations, or other issues

In a world where you can’t ignore AI and you need to use it to remain competitive, both of these scenarios are problematic.

The first outcome mirrors the risk-averse mindset mentioned above. Sometimes that means a lot of money wasted on external POCs that would never have been approved had senior stakeholders known about them. Or worse, your company decides to build its own contained AI tool to overcome compliance risks. It ends up costing millions of dollars and delivers absolutely no value (I’ve seen this in a few large consultancies/audit firms…).

In the second outcome, your AI implementation may create business value, but also introduce ethical and security risks that the organisation only realises when it’s too late. These may include biased hiring decisions or the risk of data breaches and hacking. A couple of great examples are a McDonald’s AI hiring bot that exposed millions of applicants’ data and a Chevrolet dealership’s chatbot being tricked into agreeing to sell a car for $1. These scenarios are just the tip of the iceberg (I doubt we hear about half of the issues that happen), and they have a significant impact on ethics, compliance, and organisational reputation.

These two outcomes alone show why organisations need to consider AI Governance.

But these reasons aren’t all you have to worry about; if you are a global business and operate in Europe, the EU AI Act is coming (whether you are ready or not)…

The EU AI Act is the world’s first comprehensive AI legislation. It entered into force on 1 August 2024 and is planned to be fully applicable from 2 August 2026. Aiming to foster trust in AI across Europe and building on legislation like GDPR (which protects personal data), the Act “sets out a clear set of risk-based rules for AI developers and deployers regarding specific uses of AI”.

Unsurprisingly, the big AI firms (OpenAI, Meta, Google, etc.) have urged the European Commission to delay the Act by two years. And while governments are known for bowing to corporate interests, obligations for high-risk AI systems are still on track to start in August 2026.

Because the Act applies to any organisation whose AI systems are used within the EU, even US companies offering services to European customers must comply. For the most serious violations, penalties can reach €35 million or 7% of global annual turnover (whichever is higher), exceeding GDPR’s maximum of €20 million or 4%. Even supplying incorrect, incomplete, or misleading information to authorities can incur penalties of up to €7.5 million. To prepare, companies will need to build inventories of their AI systems, implement risk management frameworks, establish human oversight, and create detailed documentation for their AI applications.
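To make the inventory idea a bit more concrete, here is a minimal Python sketch of what an inventory entry and a basic gap check might look like. It assumes a simplified version of the Act’s four risk tiers; the `AISystem` fields, the `governance_gaps` helper, and the rules it applies are hypothetical illustrations, not the Act’s legal tests.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    # Simplified from the EU AI Act's risk-based categories (illustrative only)
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"                  # e.g. CV screening / recruitment
    LIMITED = "limited"            # transparency duties, e.g. chatbots disclosing they are AI
    MINIMAL = "minimal"


@dataclass
class AISystem:
    name: str
    owner: str                                # accountable owner (hypothetical field)
    risk_tier: RiskTier
    has_human_oversight: bool = False
    documentation_url: Optional[str] = None   # hypothetical link to technical documentation


def governance_gaps(system: AISystem) -> List[str]:
    """Return illustrative governance gaps for one inventory entry (not a legal test)."""
    gaps: List[str] = []
    if not system.owner:
        gaps.append("no accountable owner")
    if system.risk_tier is RiskTier.UNACCEPTABLE:
        gaps.append("prohibited use: must not be deployed")
    elif system.risk_tier is RiskTier.HIGH:
        # High-risk systems get the extra oversight and documentation checks
        if not system.has_human_oversight:
            gaps.append("high-risk system without human oversight")
        if not system.documentation_url:
            gaps.append("high-risk system without technical documentation")
    return gaps


inventory = [
    AISystem("support-chatbot", owner="Customer Ops", risk_tier=RiskTier.LIMITED),
    AISystem("cv-screening", owner="HR", risk_tier=RiskTier.HIGH, has_human_oversight=True),
]
for system in inventory:
    print(system.name, governance_gaps(system))
# support-chatbot []
# cv-screening ['high-risk system without technical documentation']
```

Even a spreadsheet can do this job at first; the point is that every AI system has a named owner, a risk classification, and a clear list of what is still missing before it goes live.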

So the wild west of AI is catching up with companies. It may not be a question of risk tolerance or potential model issues at all; it may simply be a question of “do you want to do business in Europe?”

The US, on the other hand, is taking the opposite approach, which may create disparities in development and delivery. But given EU requirements, any AI systems used by global organisations will likely still need to be appropriately governed.

What AI Governance Actually Is

Well, that gets us to the key question here: “What is AI Governance?”

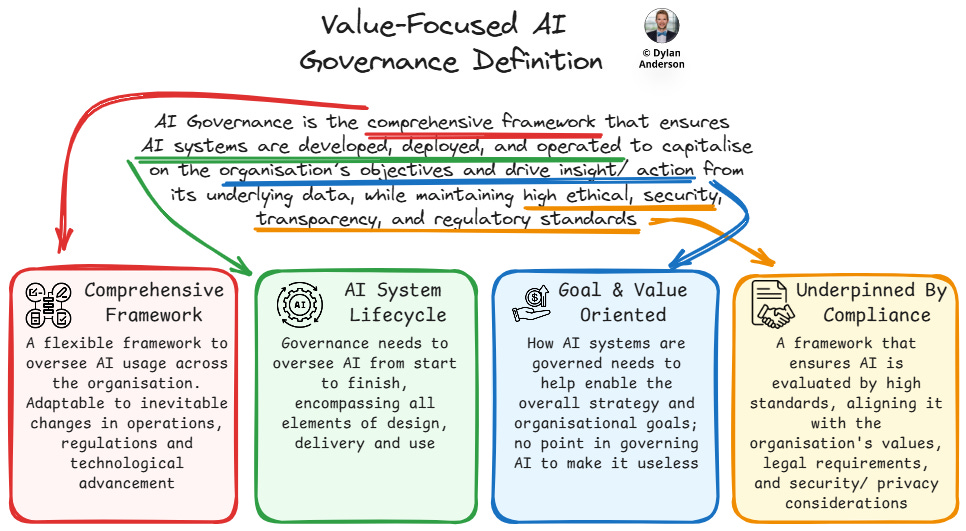

When people think about AI Governance, they probably picture the same compliance role often portrayed for Data Governance. Something like: "processes, standards and guardrails that help ensure AI systems and tools are safe and ethical, while fostering innovation and building trust."

While I don’t disagree with those elements, that definition risks pigeonholing AI Governance as a compliance activity, which will inevitably slow innovation and limit the potential of AI.

So here is my definition:

AI Governance is the comprehensive framework that ensures AI systems are developed, deployed, and operated to capitalise on the organisation’s objectives and drive insight/ action from its underlying data, while maintaining high ethical, security, transparency, and regulatory standards

Okay, this definition isn’t radically different from most you will find out there, but there are four components I want to call out:

Comprehensive framework – I have no idea what AI Governance will look like in two years, and neither do the experts. What I do know is that it needs to be flexible enough to absorb any change, and frameworks are good at that. Whatever you embed in the framework (processes, ownership, standards, etc.), what matters is how it adapts to the needs and realities of AI within your organisation.

Developed, deployed and operated – Governance is not about deciding whether a project should start or not. Especially with AI, governance needs to address the full lifecycle from design to delivery to employee adoption.

Capitalise on the organisation’s objectives and drive insight/ action from its underlying data – The primary directive of any AI tool will be to drive value for the organisation. ‘Business value’ is a watered-down term, so I replaced it with ‘the organisation’s objectives’, because AI will play an increasing role in driving those. And underpinning all of this has to be the data, specifically the action-oriented insights gathered from it!

High ethical, security, transparency, and regulatory standards – Of course, we can’t forget about compliance. Organisational ethics and values need to underpin your governance strategy. Anything you build should be secure. Your models need to be transparent to build stakeholder trust. And finally, everything has to adhere to the regulations of every country your organisation operates in.

So, echoing my earlier article defining Data Governance, this definition of AI Governance puts value enablement first, without forgetting the compliance and risk considerations the domain must take into account.

Speaking of Data Governance, I can’t end this article without discussing how AI Governance differs from and builds on DG!

Where AI and Data Governance Differ (And Build Off One Another)

In short, AI Governance is not the same as Data Governance, but it builds directly on it.

As we discussed before, data governance should aim to establish the foundational quality that enables organisational data to deliver value. This is done by creating cross-company accountability to ensure the quality of data assets, domains, and sources. Alongside this, you have the compliance, access, and regulatory requirements inherent in managing data responsibly (though mature governance teams have shifted from a compliance-led approach to quality enablement with compliance in mind).

The relationship between the two mirrors the relationship between data and AI: DG ensures that the data required to build AI systems is properly governed, which enables AI Governance to ensure the integrity, quality, and compliance of the systems built on top of that data.

As we explored in both our data quality and governance series, you cannot have effective AI governance without solid data governance. AI models are only as reliable as the data that powers them, making data quality, lineage, and ownership critical when that data trains algorithms making autonomous decisions.
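As a rough illustration of how DG feeds AI Governance, the Python sketch below blocks model training until every input dataset has an owner, documented lineage, and an acceptable quality score. The `Dataset` fields, the `training_readiness` helper, and the 0.9 quality threshold are all hypothetical; in practice, these values would come from whatever data catalogue and quality tooling your DG team already runs.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Dataset:
    name: str
    owner: Optional[str]          # accountable data owner assigned through DG
    upstream_sources: List[str]   # lineage: which source systems feed this dataset
    quality_score: float          # hypothetical 0-1 score from DG quality checks


def training_readiness(datasets: List[Dataset], min_quality: float = 0.9) -> Dict[str, List[str]]:
    """Return blocking issues per dataset; an empty dict means training may proceed."""
    issues: Dict[str, List[str]] = {}
    for ds in datasets:
        problems: List[str] = []
        if not ds.owner:
            problems.append("no data owner")
        if not ds.upstream_sources:
            problems.append("lineage undocumented")
        if ds.quality_score < min_quality:
            problems.append(f"quality {ds.quality_score:.2f} below {min_quality:.2f}")
        if problems:
            issues[ds.name] = problems
    return issues


datasets = [
    Dataset("crm_customers", owner="Sales Ops", upstream_sources=["salesforce"], quality_score=0.97),
    Dataset("support_tickets", owner=None, upstream_sources=[], quality_score=0.82),
]
print(training_readiness(datasets))
# {'support_tickets': ['no data owner', 'lineage undocumented', 'quality 0.82 below 0.90']}
```

The design choice here is that AI Governance doesn’t re-check the data itself; it consumes the ownership, lineage, and quality signals DG already produces and refuses to proceed when they are missing.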

But AI Governance will expand beyond traditional data governance and management in many ways:

Pace of Industry Change – This goes without saying and echoes everything I said above: the AI industry is evolving fast and furiously! Can governance keep pace? Data evolved quickly too, but not like this, which gave DG a chance to catch up in companies willing to invest in it. For AI, I see this as less likely. And you also need to consider the changing regulatory landscape!

Dynamic vs. Less Dynamic Governance – Just like the pace of change, AI assets are very dynamic and constantly evolving. That’s not to say data is static, but DG focuses on information assets that shouldn’t change substantially when products or tools are updated. AI Governance, meanwhile, manages algorithms that continuously learn, adapt, and change their behaviour on top of that data, which may itself be shifting.

Algorithmic Visibility & Understanding – Transparency will be a core issue in AI Governance. In DG, identifying which data comes from which source systems is a challenge, but not an impossible one. With AI, teams need visibility into both the data and the algorithm, both of which can be difficult to understand.

Broader Implications – While data governance focuses on organisational data management, AI Governance must consider broader societal implications. Sure, things like GDPR and data privacy cause headaches for DG teams, but that will be nothing compared to live AI agents making autonomous decisions when interacting with customers (decisions the company is legally accountable for).

Domain Expertise Necessary – In DG, we rely on business stakeholders and teams to make sense of the data they own. With AI, this becomes more difficult, because it is hard to grasp what an AI system is doing without a background in data science or AI engineering. Therefore, I don’t see AI owners and stewards as a side-of-desk job for business professionals, as is often the case with Data Governance.
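One practical way to handle the dynamic nature of AI assets is to monitor whether the data a model sees in production has drifted from the data it was approved on. The Python sketch below uses the Population Stability Index (PSI), one common drift metric, chosen here purely as an illustration. The 0.1 and 0.25 thresholds are a widely quoted rule of thumb rather than a standard, and the example data is made up.

```python
import math
from typing import List, Sequence


def _bin_proportions(values: Sequence[float], lo: float, width: float,
                     bins: int, eps: float) -> List[float]:
    """Share of values in each equal-width bin; empty bins get eps to avoid log(0)."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        idx = min(max(idx, 0), bins - 1)  # clip values outside the baseline range into edge bins
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (current_i - baseline_i) * ln(current_i / baseline_i)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline
    b = _bin_proportions(baseline, lo, width, bins, eps)
    c = _bin_proportions(current, lo, width, bins, eps)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def drift_status(psi: float) -> str:
    # Commonly quoted rule-of-thumb thresholds; tune them per model and use case
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: review"
    return "significant shift: escalate to model owner"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]  # e.g. model scores when the system was approved
    current = [v + 0.5 for v in baseline]     # illustrative shifted production scores
    score = population_stability_index(baseline, current)
    print(f"PSI={score:.2f} -> {drift_status(score)}")
```

PSI only catches shifts in a single input or score distribution, so it complements, rather than replaces, reviewing how the model’s behaviour and outcomes change over time.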

As we move forward, we need to think of this governance ecosystem and how DG and AI governance interact. How do we build on what we already have? Where does data impact AI governance? What is the change management required to make this tangible in organisational teams?

There are a lot of questions that I don’t have the answer to (in this article at least). But next week, we will talk about AI Governance from a more strategic angle. So tune in to that and have a great rest of your Sunday!

Thanks for the read! Comment below and share the newsletter if you think it’s relevant! Feel free to also follow me on Substack, LinkedIn, and Medium. See you amazing folks next week!
