Issue #43 – Approaching Data Quality in Today's Complex Data World

The elephant in the data room, and how to address it with everything going on in our industry

Read time: 15 minutes

Four teams. Four dashboard reports. Four different revenue numbers.

This scenario is all too familiar, with implications damaging data team reputations across industries.

The end result is frustrated executives, scrambling data teams, and short-term, manual band-aid solutions to foundational problems.

It all comes down to data quality, and the challenge of ensuring it in today’s complex data landscape.

Despite organisations investing millions in data infrastructure, quality issues persist wherever you look.

Here are some stats I shared in my article last summer that still magnify the scope of this issue:

Gartner stated in 2021 that bad data quality costs organisations an average of $12.9 million per year

IBM conducted a study that showed data quality issues cost the US economy $3.1 Trillion in 2016, which has certainly gotten higher over the past decade

In 2017, Thomas Redman (writing for MIT Sloan Management Review) estimated that bad data might cost companies as much as 15% to 25% of their revenue

You’d think we were getting better at these things, wouldn't you?

But here's the paradox: We have more data tools, quality frameworks, and attention on data quality than ever before, yet the problems are getting worse, not better.

Why? Because while attention on data quality is growing, it’s only doing so while the overall data industry continues to evolve—and evolve at pace, might I add!

Traditional approaches to data quality are not keeping pace with today's complex data ecosystems.

I’ve wanted to dive into data quality topics like observability, contracts, testing, management and governance for a while. However, before we proceed, I need to address the topic at an overarching level, building on my two articles from last summer—that’s the purpose of this article.

So let’s jump in!

The Underlying Human Challenges to Data Quality

People have been complaining about data quality for as long as I can remember.

But at a fundamental level, organisations make a critical mistake when trying to address it: they focus on symptoms rather than causes.

When a CEO questions why the sales numbers don't match across reports, the typical answer is to a quick fix—update the dashboard, manually correct the data, or add another quality check. And as I covered in my article last summer on data quality, it’s the root causes of quality that cause the problems, and these remain unchecked, ready to strike mistrust in the data after that band-aid has fallen off.

Considering human history and psychology, this makes sense. From a historical perspective, before computers, you manually checked things if they seemed wrong. Then you fixed them. Automation wasn’t really a thing and for business stakeholders who are so used to manually creating reports and checking the outputs, this logic persists.

On the psychological side, when humans encounter a problem, they naturally address it at a surface level because they don’t know how to tackle the deeper root issue. Only experts make that assessment and lay out a plan to solve for the long term. It takes individuals with an understanding of the problem to identify it, and they then invest in an expert to help solve it (think a doctor, contractor, or plumber, although problems in these professions are commonly).

When you combine these elements and add the data and technology component, the complexity snowballs.

A big reason for this is that data quality is not an obvious or clear problem—in fact data quality is defined differently depending on who you talk to.

Of course, there are the obvious issues, such as missing data, misspelt inputs, or huge outliers. However, most of the problems we encounter today are more nuanced (e.g., different formats, outdated numbers, data that appears incorrect, inconsistent spellings of the same term, etc.). And these issues stem from a lack of agreement between business and data stakeholders.

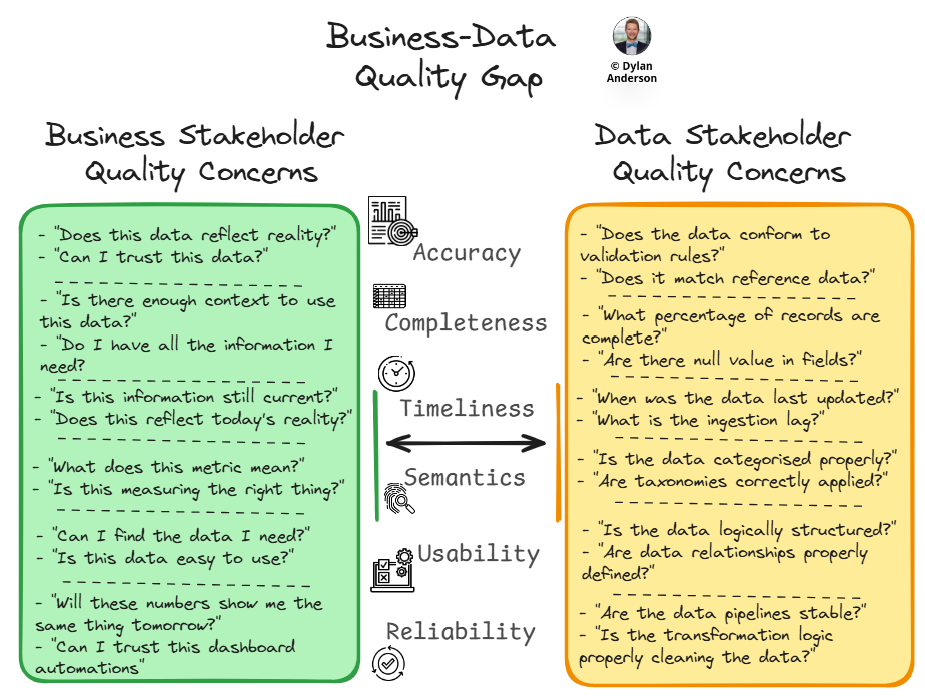

A significant aspect of this issue is often highlighted as a data literacy gap. On the other hand, data professionals usually have a business literacy gap. Brought together, you can call this a business-data gap, where business stakeholders and data teams view quality through different lenses:

Business teams consider quality in terms of usability for their specific use cases. Does the data make sense to them is another way of saying, is it accurate, relevant and reliable? Ultimately, the primary question comes down to either "Can I trust this data to make a decision?" or “What is this data telling me?”

Data teams assess quality from a more technical perspective. When conducting their analysis or piping data through the platform, they tend to focus on completeness, consistency, and minimising errors. This comes down to whether the data breaks the model or meets our technical standards.

This misalignment creates a situation where both sides believe they are addressing quality, but they are actually solving different problems.

In the end, data quality isn't a technical issue—it's a people issue. Without agreement on what "good" looks like, technical solutions will always fall short.

Top 5 Data Quality Issues In Large Organisations

I’ve worked with about 20-30 organisations in the past 3-4 years. For each of them, I get to know their business goals, their underlying tech stack and where they are struggling with data issues.

One area is common amongst them all—their data quality!

As I alluded to above, these problems usually aren’t contained to the technical domain—they stem from fundamental organisational and process challenges. Let's explore the five most common issues:

1. No Standards/Definitions for Key Terms/Data Domains

Every organisation has multiple teams. When you are small, it isn’t challenging to understand the metrics or terms across teams and achieve alignment on what they mean. As an organisation scales, this becomes more difficult. As a large organisation, this becomes impossible without some approach.

Consider a seemingly straightforward term, such as "customer." Marketing, sales and ops might all have different definitions: Is it someone who bought a product? Is it a store that we are selling through? Is it a wholesaler that distributes our products?

Without clear, organisation-wide definitions for key data domains and business concepts, different teams inevitably create their individual interpretations. The result is multiple versions of "truth" that create confusion and undermine trust in data. Data quality will be poor if there is no agreed-upon definition, right? As an estimate, this issue is detrimental to 80-90% of the companies I have interacted with.

2. Increasingly Complex Technology Ecosystem That Doesn't Work Together

With the growth of data comes an increase in complexity. Different tools and platforms across teams. No ingestion principles, leading to silos of data within different technologies. A lack of strategy creates confusion about the overall technology ecosystem/ architecture. Oh and don’t forget legacy systems that weren’t designed for today's interconnected data environment.

Each tool in your organisation has its own role, reason for being, way of handling data, quality rules, and so on. And I’m not just talking about data tools, but the operational systems that keep the lights on. These inconsistencies introduce points of failure where data quality can deteriorate from the source.

Furthermore, with the rapid evolution of data tools, organisations often find themselves adding new technologies without fully retiring old ones. This creates a tangled web of data flows where tracking lineage and ensuring quality becomes nearly impossible. Continuing with my estimate, this challenge affects all organisations I have seen that are older than five years. However, some have invested significantly in architecture and data modelling to mitigate its impact.

3. Accountability for Data Across Systems Does Not Exist

The question of "who owns this data?" is surprisingly difficult to answer. As data moves through various systems and teams, accountability becomes diffused or lost entirely.

Is the source system owner responsible? The data engineering team that built the pipeline? The analytics team that created the dashboard? Or is it the business team that eventually uses the data for their decision-making? The result is that no expert within the organisation can be accountable for the data and the business outcomes it influences. Then (similar to the psychological deferral I mentioned above), nobody takes action, resulting in finger-pointing and band-aid fixes. The accountability gap allows quality issues to grow and multiply over time as data flows through increasingly complex systems.

In case you were wondering, this is why data remains locked up in specific departments or teams… Oh and I would say this is a massive problem for 60-70% of organisations.

4. No Team or Method to Fix Data Quality

If I quote from my article last summer: Even if data quality issues are identified, most organisations lack systematic processes for resolving problems (other than manual updates), meaning they continue to be pervasive within data operations and output quality is compromised.

This comes down to a lack of experts to define the issue and spend the time addressing the root causes to create long-term solutions. And then data quality becomes "everyone's problem but no one's job."

Quality initiatives are often reactive—responding to specific complaints or incidents—rather than proactive and systematic. Without a dedicated team or established methodology for addressing quality issues, organisations find themselves in an endless cycle of firefighting.

Companies are investing in data quality tools, but there needs to be cross-functional coordination between business, engineering, governance and (if they have them) data management teams to ensure the implementation addresses those root issues.

I’d say 50-60% of companies I’ve seen don’t have a data quality team or approach to addressing it. It is usually handed off in tickets to engineers, analysts or a random person who spends 5 hours a week manually fixing the report in Excel.

5. Tackling Issues with Band-Aid, Manual Fixes or Hiring Further Headcount

Again, we go back to the historical and psychological blocker that hinders progress with data quality… When faced with data quality problems, the typical organisational response is either quick manual fixes or throwing more people at the problem. Without the expertise to fundamentally diagnose the underlying technology/ data issues and assess the people/ process shortcomings, most solutions will fall short.

And in the end, you get manual interventions—like one-off data corrections or dashboard adjustments—that provide temporary relief but do nothing to prevent the same problems from recurring. Or organisations hire more analysts or engineers might help manage the symptoms.

This approach is short-term over long-term. And as we all know, most organisations have a short-term mindset. That being said, some companies have built their data platforms on very strong foundations, which proactively counter these issues from occurring. I would say, from my experience, these companies tend to be data-led organisations that see the benefit in investing in long-term data/ technology success

Despite all these things, I do see a few rays of light shining through. One is the platform approach to data quality tooling, while the other is the potential of AI to help with these issues.

The Platform Approach to Data Quality

Today, every data tooling company takes a platform approach. I know, because I have researched them all and every website talks about their full suite of tooling addressing every part of the data quality journey.

Despite the sea of sameness marketing, this ‘platform approach’ was adopted for a reason and represents a beneficial evolution of data quality tooling.

Given all that we have discussed above, it does not make sense to address data quality in isolation.

Data flows through numerous systems, undergoes multiple transformations, and should be cross-functional in nature. No doubt that the tools to ensure its quality should also take that approach?

Moreover, companies are hesitant to invest in data quality. Therefore, asking a CFO to sign off on an investment for three tools that are all working to solve the same issue doesn’t make sense (you try explaining the difference between data governance and observability to a finance person).

With the recognition that data quality is not a single problem to be solved but a continuous process that must be embedded throughout the data ecosystem, what does that ultimately boil down to?

Well, I’ve broken it down into a three-pronged approach:

Detection

Enforcement

Ownership

The following three articles will delve into each of these areas in more detail, but I would like to provide a high-level explanation of what they are and how they fit into an overall data quality platform approach.

I should also note that this approach doesn’t encompass the many other aspects that enable a strong data quality foundation, such as a well-built technology architecture, business-focused data modelling, and clear operational principles for working with data. Remember, everything in the data ecosystem needs to be considered holistically, so think about secondary elements when evaluating data quality as well.

Detection (Observability) – Starting with detection makes sense. Why? Well, the problem usually starts with “we found these errors in the data.” The solution in data quality, therefore, started with “let’s automate the process of finding these errors.” This is why most data quality platforms started as Data Observability tools. They focus on providing visibility into what's happening with your data at all times. If something seems off, the right teams (downstream analytics or upstream engineers) are alerted to the issue, allowing them to go and fix it. With a platform embedded across data and technology tools, organisations can continuously monitor their entire data ecosystem to detect problems as they emerge.

Enforcement (Contracts & Testing) – Contracts are the biggest buzzword in the data quality world right now. It is all based on the premise of shifting left and is the next obvious step from detection. So while observability tells you when something is wrong, enforcement mechanisms like data contracts or pipeline testing focus on prevention. Contracts help establish formal agreements between data producers and consumers, clearly defining expectations for data structure, semantics, and quality. Testing then complements contracts by verifying that data meets defined quality standards. Modern data quality platforms often include both, incorporating contracts into the data they monitor and test throughout the data lifecycle. Add it to the observability piece above, and you have a strong foundation for proactively preventing and identifying any data issues.

Ownership (Governance & Catalogues) – Data ownership isn’t embedded into all data quality platforms because a lot of this falls into the Data Governance category. Contracts can often stipulate ownership, but only a proper governance strategy, with clear roles and responsibilities, business alignment, and data standards, can work across teams and bridge the business-data gap. Effective governance establishes the organisational framework to enable quality over time with the right people and processes. That being said, many data quality platforms either include or integrate with Data Catalogues, which provide context for datasets, increase discoverability for business teams, and, of course, establish accountability for datasets. By integrating governance with technical quality measures, platform approaches can ensure that quality initiatives and automation are linked to existing governance standards and rules, thereby ensuring that data remains aligned with the business needs for it beyond the platform’s initial implementation.

AI's Role in Data Quality

I decided I can’t write this article without mentioning AI (especially since I just wrote a six-part series on it) and its role in data quality.

Artificial intelligence brings a divergent perspective on data quality.

On one hand, AI allows companies to work with imperfect data in ways traditional systems cannot. For example, AI systems can:

Identify patterns in noisy data that would be otherwise difficult to detect

Suggest corrections for common quality issues based on learned patterns and contexts

Automate routine quality tasks like deduplication, standardisation, and anomaly detection

Adapt to changing data characteristics without requiring manual reconfiguration

With so many organisations still relying on manual data quality fixes, these capabilities are a breath of fresh air, enabling them to improve quality at scale and address issues that would be impractical to handle manually. Not to mention, with data quality platforms and tools embedding these AI approaches, this saves engineering time spent trying to build them into existing data platforms and systems.

However, despite these automation gains, AI is not a magic solution— and you should never listen to a company that claims it is. Much of data quality is not about technical fixes, but rather the human context. For AI to effectively contribute to data quality, it requires proper context about the data it is working with. This includes:

Domain knowledge about what the data represents and how it's used

Business rules that might not be apparent from the data itself

Relationship information between different data elements and systems (cough data modelling cough)

Quality expectations that define what "good" looks like in specific contexts

Without this context, AI systems may make statistically valid but business-invalid assumptions. These inaccuracies might create question marks in stakeholders’ minds and, ultimately, reduce trust in data and AI.

The last thing to mention is that while AI presents an opportunity, it could also exacerbate the existing business-data gap that we see in most organisations. Why? Because AI is a black box!

Unlike traditional quality rules that have clear logic and can be explained by a human, AI systems learn from the data, making decisions based on complex models that are difficult to interpret. I mean, Sam Altman has even admitted that OpenAI doesn’t understand how its AI works, so how are business stakeholders going to know how some random AI quality tool or model works? Companies need to not just jump into bed with AI-enabled data quality tools, they instead have to consider the implications like:

Explainability of how the quality decisions are made and what the logic is

Bias detection and prevention to account for hallucinations and unequal data availability

Validation frameworks need to verify that AI corrections align with business expectations

Governance processes with humans-in-the-loop to help translate and handle the complexity of AI-based quality systems

Organisations adopting AI for data quality must balance the technology's power with appropriate controls. This means investing not just in AI capabilities but in the frameworks to govern them effectively.

As AI continues to evolve, it will likely become an integral part of data quality management—not replacing human judgment and oversight, but augmenting it to handle the scale and complexity of modern data environments.

To be fair, going back to my historical and psychological blockers to data quality, AI does present a pretty unique solution. With enough context and oversight, AI can make data quality automation a new norm, while providing the right expertise to understand and deal with root causes of data quality issues rather than just treat the symptoms

The key will be developing approaches that leverage AI's strengths while mitigating its limitations.

The Data Quality Journey Continues…

The reason I wrote this article (even after addressing data quality in two articles last summer) is that the topic just keeps resurfacing. Data quality remains one of the most persistent challenges in the Data Ecosystem, and I don’t foresee that going away.

So after this introduction of data quality in today's complex data world, we have three articles that will go into popular approaches to solve for it. First, we dive into Detection with an article on Data Observability. Here, we will discuss how the most popular data quality domain has evolved and how observability tools now enable companies to be more proactive in detecting issues. Secondly, we get into Enforcement. Everybody is talking about shifting left, with data contracts and testing taking the lead. Third, we will start our venture into Ownership. Now, there will be subsequent articles on Data Governance (a huge topic), but this will introduce us to this critical topic that needs further discussion.

As always, thanks for reading! Subscribe, comment, and have a great Monday (Easter Sunday made me post today)!

Thanks for the read! Comment below and share the newsletter/ issue if you think it is relevant! Feel free to also follow me on LinkedIn (very active) or Medium (increasingly active). And if you are interested in consulting, please do reach out. See you amazing folks next week!

Another question about data that is seldom asked is, "How much quality is 'good enough?'" There are many existing data stores that can never be fully accurate. An example is the number of active service lines managed by a phone company. Several years ago, a project related to assigning equipment for phone service identified six sources, each of which was treated as accurate by individual business processes - billing, service, provisioning (assigning equipment), engineering, finance, and sales/marketing. Each of these had a different number of active lines and the range exceeded 24,000. Could these differences be reduced? Maybe, but in the time required to gather detailed information in the company's central offices (where the network switches live), the number will have changed as new customers are added and existing customers leave. In addition, it's not clear that any connected circuit is really active (it may be connected but it's not used or billed). How much is the gap costing the company? Clearly, it's in the company's interest to have some number of inactive service lines in order to provide service quickly to customers. In situations like this, the cost to achieve a defined level of data quality may be greater than the benefit gained through improved management of idle equipment. So how do we figure out if the quality of data is "good enough?"